In general software development, a Proof of Concept (POC) is a small-scale exercise to test whether a proposed idea or system is feasible (technically, economically, or organisationally) before committing to full-scale development. For an AI Product Manager, key decisions, such as whether to ship AI as a product or AI as a feature, start with building an AI POC.

When applied to AI, an AI Proof of Concept (AI PoC) becomes a focused, low-risk experiment to evaluate whether an AI-based solution can actually solve a real business problem and deliver value, without investing excessive time or resources upfront.

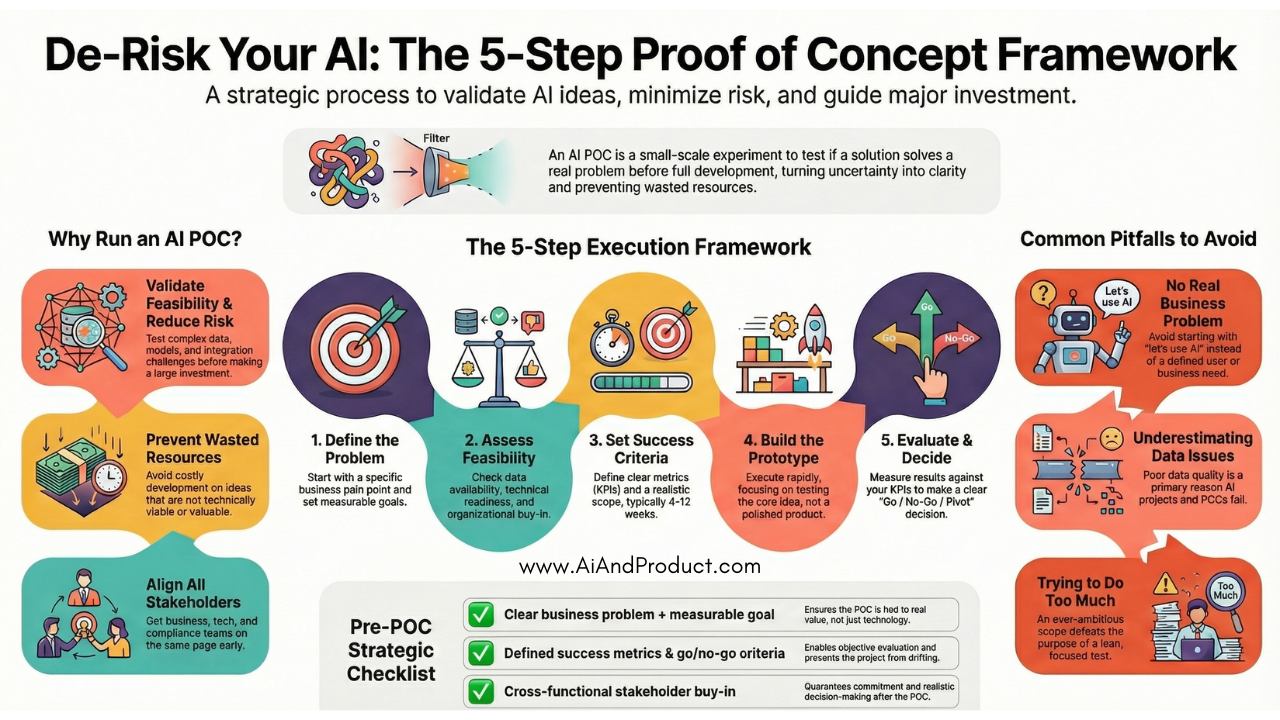

Why run an AI POC?

- Validate feasibility & reduce risk: AI often brings complexity — data requirements, model constraints, integration challenges. A PoC helps verify whether the idea is viable before scaling.

- Avoid wasted resources: Building a full product around an untested AI idea can be costly in terms of time, money, and effort. POCs help avoid such misinvestment.

- Align stakeholders & set expectations: A well-defined AI POC helps business, product, tech, and compliance teams get on the same page — about the problem, constraints, success criteria, and what “done” means.

- Quick decision-making & learning: By isolating the core uncertainty and testing it early with real or representative data, teams can learn — often within weeks — whether to proceed, pivot, or drop the idea.

A Practical Framework: Step-by-Step Process to Decide and Build an AI POC

Based on best practices observed across industry guides and consulting firms, here’s a practical framework you can follow.

Step 1: Discovery & Problem Definition (Why are we doing this?)

- Identify the business problem / pain point — Not AI for AI’s sake. Look for a real challenge: e.g. high manual workload, slow decision-making, error-prone tasks, inefficiencies, or unmet user needs.

- Frame the goal in measurable terms — E.g. “reduce customer churn by 15%,” “automate invoice processing to cut time by 60%,” or “improve document classification accuracy to 90%.”

- Clarify what uncertainty you’re trying to resolve — This could be technical (will AI do the job?), data-related (is data quality sufficient?), or business-related (does this solution really deliver value?).

- Set constraints and boundaries — Time, budget, infrastructure, compliance/regulation, data privacy etc. This helps keep the PoC lightweight and focused.

Step 2: Feasibility & Data Assessment (Can we even do it?)

Once the problem is defined, assess whether it’s actually possible to build an AI solution given your current environment. Key checks:

- Data availability & quality — Is there enough data? Is it accessible? Representative? Clean? Do you have labels, or will you need to label data? Are there compliance / privacy constraints?

- Technical & architectural feasibility — Can your existing systems integrate with an AI module? Can you build a sandbox or prototype environment? What’s the compute / infra requirement?

- Organizational readiness — Do you have cross-functional buy-in (business + tech + stakeholders)? Do you have resources (people, time) allocated? Is there clarity on who “owns” the PoC?

If feasibility checks fail, that’s a legitimate outcome. The PoC helps you discover that risk early — better to know now than after full-scale development begins.
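
The data checks above can start as a very small script run against a sample export. The sketch below is one possible shape, not a standard tool: the function name `audit_dataset`, the field names, and the sample records are all hypothetical, and it assumes the data fits in memory as a list of dictionaries.

```python
from collections import Counter


def audit_dataset(rows, label_field, required_fields):
    """Summarise basic readiness signals for a list of dict records."""
    total = len(rows)
    if total == 0:
        return {"rows": 0, "missing_rate": {}, "labelled_share": 0.0,
                "duplicate_share": 0.0, "label_counts": {}}

    def is_missing(value):
        return value is None or value == ""

    # Share of rows with a missing value, per required field.
    missing_rate = {
        field: sum(is_missing(r.get(field)) for r in rows) / total
        for field in required_fields
    }
    # Labels that are already present (unlabelled rows would need annotation).
    labels = [r.get(label_field) for r in rows
              if not is_missing(r.get(label_field))]
    # Exact duplicates, comparing the required fields only.
    keys = Counter(tuple(r.get(f) for f in required_fields) for r in rows)
    duplicates = sum(count - 1 for count in keys.values())

    return {
        "rows": total,
        "missing_rate": missing_rate,
        "labelled_share": len(labels) / total,
        "duplicate_share": duplicates / total,
        "label_counts": dict(Counter(labels)),
    }


# Hypothetical sample records, for illustration only.
sample = [
    {"text": "Invoice 1001 overdue", "amount": 120.0, "label": "finance"},
    {"text": "Reset my password", "amount": None, "label": "support"},
    {"text": "Reset my password", "amount": None, "label": None},
    {"text": "", "amount": 75.5, "label": "finance"},
]
report = audit_dataset(sample, label_field="label",
                       required_fields=["text", "amount"])
print(report)
```

A report like this does not answer whether the data is representative or compliant, but it gives the team concrete numbers (missing values, label coverage, duplicates, class balance) to discuss before committing to a build.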

Step 3: Define Success Criteria & Scope (What does “good” look like?)

- Define metrics / KPIs up front — e.g. accuracy, precision/recall, processing time reduction, cost savings, throughput, user satisfaction, error rate drop, etc.

- Set a realistic scope and duration — An AI POC is not a full product. Typical durations range from 4–6 weeks up to 8–12 weeks depending on complexity.

- Document assumptions, risks, constraints — Data issues, scaling challenges, compliance, integration, change management, etc. This helps stakeholders set the right expectations.

- Get stakeholder sign-off — Ensure business, product, tech and other relevant stakeholders agree on success criteria, timeline, budget, roles, and next-step decision gates.
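
One way to make success criteria unambiguous is to write each KPI down with its baseline, its target, and the direction of improvement. The sketch below is illustrative only: the `Kpi` class, the `reduction_target` helper, and every baseline value are hypothetical. It also assumes that a goal such as "reduce by 15%" means a relative reduction from the baseline, not a drop in percentage points, which is worth confirming with stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    name: str
    baseline: float         # value measured before the PoC (hypothetical here)
    target: float           # value the PoC must reach
    higher_is_better: bool  # direction of improvement

    def is_met(self, measured: float) -> bool:
        """True if the measured value reaches the target in the right direction."""
        if self.higher_is_better:
            return measured >= self.target
        return measured <= self.target


def reduction_target(baseline: float, reduction_pct: float) -> float:
    """Absolute target after a relative reduction of `reduction_pct` percent."""
    return baseline * (1 - reduction_pct / 100)


kpis = [
    Kpi("minutes per invoice", 10.0, reduction_target(10.0, 60),
        higher_is_better=False),
    Kpi("monthly churn (%)", 8.0, reduction_target(8.0, 15),
        higher_is_better=False),
    Kpi("classification accuracy", 0.82, 0.90, higher_is_better=True),
]

for kpi in kpis:
    print(f"{kpi.name}: baseline {kpi.baseline:g} -> target {kpi.target:g}")
```

With these assumed baselines, a 60% cut from 10 minutes per invoice becomes an absolute target of 4 minutes, which is much easier to sign off on than a percentage alone.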

Step 4: Build the Prototype / PoC (Rapid Execution)

With the problem, scope, and success criteria in hand, it is time to execute:

- Prepare data — Clean, normalize, label (if needed), ensure data quality and representativeness. Data prep often takes more time than expected.

- Choose appropriate AI technique / model — Based on the problem, data, and goals. Could be simple ML, NLP, computer vision, classical algorithms, or more advanced approaches. Use existing models or frameworks if possible for speed.

- Rapid prototyping & testing — Build a minimal, functional prototype to test core assumptions — not a polished product. Test on real or representative data to get reliable feedback.
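
When testing the prototype, it helps to compare it against a trivial baseline, because accuracy alone can look respectable on imbalanced data. The following is a minimal sketch with made-up labels and predictions; the churn example, the variable names, and the majority-class baseline are assumptions chosen for illustration, not a prescribed evaluation method.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, positive):
    """Precision and recall for one class, computed from raw counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def majority_baseline(y_train, n):
    """Always predict the most frequent training label."""
    most_common = Counter(y_train).most_common(1)[0][0]
    return [most_common] * n


# Hypothetical labels and prototype predictions, for illustration only.
y_train = ["keep", "keep", "keep", "churn", "keep", "churn"]
y_true = ["churn", "keep", "keep", "churn", "keep", "churn", "keep", "keep"]
y_prototype = ["churn", "keep", "churn", "churn", "keep", "keep", "keep", "keep"]

baseline_pred = majority_baseline(y_train, len(y_true))

for name, pred in [("baseline", baseline_pred), ("prototype", y_prototype)]:
    p, r = precision_recall(y_true, pred, positive="churn")
    print(f"{name}: accuracy={accuracy(y_true, pred):.3f} "
          f"precision={p:.3f} recall={r:.3f}")
```

In this toy data the baseline reaches 62.5% accuracy while finding no churners at all, so the prototype's value shows up in precision and recall on the class the business cares about, not in accuracy.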

Step 5: Evaluate PoC Results — Go / No-Go Decision

- Measure against your pre-defined KPIs — Did the PoC meet (or come close to) the success criteria? E.g. model accuracy, error rate, time saved, cost reduced, user feedback etc.

- Assess technical & business viability — Technical performance is necessary but not sufficient. Also evaluate data maintainability, integration challenges, compliance/legal aspects, scalability prospects.

- Make a decision — Proceed / Pivot / Drop — Based on results and stakeholder alignment. If the PoC succeeds but reveals gaps (e.g. data quality, scaling constraints), you may decide to pivot scope or invest in data/infrastructure first. If it fails, it’s better to recognize it early and redirect resources.

- Document learnings & next steps — Record what worked, what didn’t, lessons learned, and a plan (roadmap) for scaling, pilot, or further experimentation.
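
The KPI part of the go/no-go gate can be written down as an explicit rule, so the decision is not argued from scratch after the results arrive. The sketch below encodes one hypothetical rule: proceed if every KPI is met, pivot if every miss is within a relative tolerance, drop otherwise. The function name `decide`, the 10% tolerance, and the sample results are assumptions, and the rule covers only the measured KPIs, not the wider viability questions listed above.

```python
def decide(results, near_miss=0.10):
    """Classify PoC results as 'proceed', 'pivot', or 'drop'.

    results maps a KPI name to (measured, target, higher_is_better).
    near_miss is the relative shortfall still treated as "close".
    """
    met, near, missed = [], [], []
    for name, (measured, target, higher_is_better) in results.items():
        # Positive gap means the target was missed by that amount.
        gap = (target - measured) if higher_is_better else (measured - target)
        shortfall = gap / abs(target) if target else gap
        if shortfall <= 0:
            met.append(name)
        elif shortfall <= near_miss:
            near.append(name)
        else:
            missed.append(name)

    if missed:
        verdict = "drop"
    elif near:
        verdict = "pivot"
    else:
        verdict = "proceed"
    return verdict, {"met": met, "near": near, "missed": missed}


# Hypothetical PoC results, for illustration only.
results = {
    "classification accuracy": (0.87, 0.90, True),
    "minutes per invoice": (3.5, 4.0, False),
}
verdict, detail = decide(results)
print(verdict, detail)
```

Here accuracy falls about 3% short of its target while processing time beats its target, so the rule returns "pivot". In practice the verdict is a starting point for the stakeholder discussion, not a substitute for it.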

Pitfalls & Why Many AI POCs Fail — What to Watch Out For

Even well-intentioned AI POCs often fail — here are common pitfalls and how to avoid them:

- No real business problem / vague goal: If the PoC starts with “let’s try AI” rather than a defined business pain, the result is likely hype — and little value.

- Data quality or availability issues underestimated: Poor, incomplete, or unlabelled data can derail even the best AI model ideas. Do not cut corners on data preparation.

- Lack of clear metrics / “success criteria” — or no go/no-go gate defined: Without measurable objectives and a decision point, PoCs drift into “innovation theatre” — polished demos that never lead to production.

- Organisational misalignment: If only tech/IT is driving the PoC (without business/product/executive buy-in), even a technically successful PoC may not be accepted for further development.

- Trying to do too much: Packing an over-ambitious scope and complex requirements (data, features, scalability) into a single PoC leads to delays and budget overruns, and may defeat the purpose. Keep it lean and focused.

When You Should (and Should Not) Do an AI POC

Use an AI POC when:

- You have a clearly defined, high-value business problem that could benefit from AI.

- You don’t know whether the available data is sufficient, or you want to test data quality, model feasibility, or integration without a big commitment.

- There is organizational willingness: cross-functional stakeholders are engaged, budget and time are limited, and there is interest in experimentation but a need for validation first.

Avoid a PoC (or rethink) when:

- The business problem is vague, or the goal is just “let’s try AI”. In such cases, building a POC often becomes a waste of time.

- There’s no data, or the data is so fragmented or dirty that cleaning and preparing it is unrealistic within your constraints. In that case, it is better to focus first on data infrastructure.

- There’s no commitment beyond the POC stage — no plan or budget to scale even if the PoC succeeds. That often leads to “pilot purgatory.”

A Sample AI POC Decision & Execution Checklist (for Your Team)

| ✅ What to Check / Define | 📌 Why It Matters |
|---|---|
| Clear business problem + measurable goal | Ensures PoC is tied to real value, not just experimentation |
| Defined success metrics / KPIs + go/no-go criteria | Enables objective evaluation, decisions, and prevents drift |
| Data availability & quality check | AI depends heavily on data; ensures feasibility from start |
| Realistic scope, time-boxed (4–8 weeks) & resource allocation | Keeps POC lightweight and avoids “over-engineering” |
| Cross-functional stakeholder alignment & buy-in | Ensures commitment and realistic decision-making |
| Technical/infrastructure feasibility (sandbox, integration plan) | Confirms the PoC is implementable within existing/available stack |
| Plan for documentation & roadmap if PoC succeeds | Helps transition smoothly to pilot/full product if decision is yes |
| Risk & assumption log (data risks, compliance, scaling risk, dependencies) | Avoids surprises and helps governance, especially for AI/data-heavy projects |

Conclusion: Treat AI POC as a Strategic Compass — Not Just a Gadget

An AI POC isn’t about showing off what AI can do. It’s about demonstrating whether AI should be used — for this problem, in this context, with this data, under these constraints.

When done correctly, an AI POC can transform uncertainty into clarity: it gives decision-makers concrete evidence — in weeks, not months — about what works, what doesn’t, and whether the idea is worth scaling.

If you skip the PoC, you risk spending months or years on development — only to discover the AI doesn’t fit the problem, data is poor, or integration is messy. That’s costly in money, time, and organizational trust.

I encourage you to adopt (or adapt) this framework before launching any AI initiative. Think of the PoC as your strategic compass — guiding AI investment, minimizing risk, and maximizing impact.

Additional Resource: